Python側でのTensorFlowの隆盛を他所に、R側では{tensorflow}も使いにくいし*1これはPythonistaに転生しなければならんのかなぁ。。。ということを思っていたら、出ました。あのKerasのRパッケージです。

インストール手順は普通にhttps://rstudio.github.io/keras/に書いてある通り、以下の通りRコンソールから打てばおしまいです。

devtools::install_github("rstudio/keras") library(keras) install_tensorflow()

ということで、早速色々試してみようと思います。

3層NNをサクッと試してみる

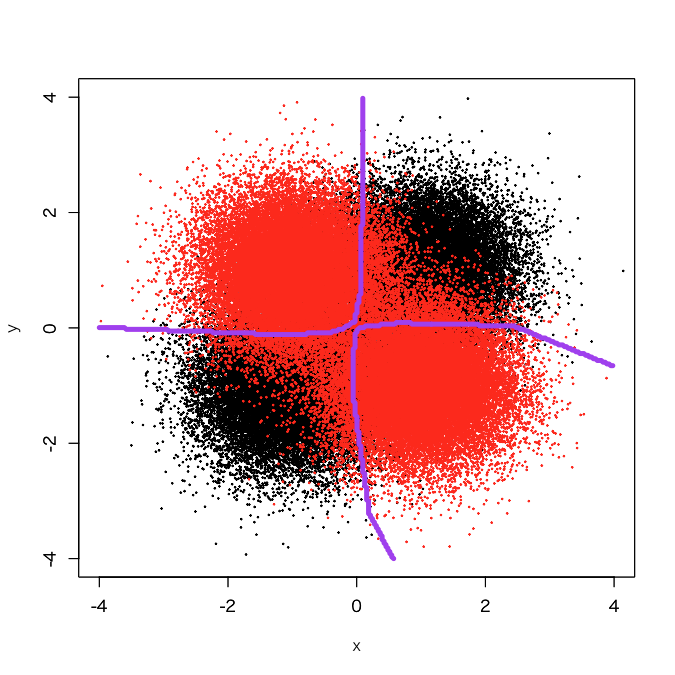

データセットはこちらのXORデータセット(10万サンプル)を使います。

> d <- read.csv('xor_complex_large.txt', header=T, sep='\t') > x_train <- as.matrix(d[,-3]) > y_train <- as.matrix(d[,3]) - 1 > library(keras) > model <- keras_model_sequential() > > model %>% + layer_dense(units = 5, input_shape = 2) %>% + layer_activation(activation = 'relu') %>% + layer_dense(units = 7) %>% + layer_activation(activation = 'relu') %>% + layer_dense(units = 1, activation = 'sigmoid') > > model %>% compile( + loss = 'binary_crossentropy', + optimizer = optimizer_sgd(lr = 0.05), + metrics = c('accuracy') + ) > model %>% fit(x_train, y_train, epochs = 5, batch_size = 100) Epoch 1/5 100000/100000 [==============================] - 2s - loss: 0.3898 - acc: 0.8273 Epoch 2/5 100000/100000 [==============================] - 1s - loss: 0.3381 - acc: 0.8525 Epoch 3/5 100000/100000 [==============================] - 1s - loss: 0.3288 - acc: 0.8557 Epoch 4/5 100000/100000 [==============================] - 2s - loss: 0.3246 - acc: 0.8571 Epoch 5/5 100000/100000 [==============================] - 1s - loss: 0.3233 - acc: 0.8570 > px <- seq(-4,4,0.03) > py <- seq(-4,4,0.03) > x_test <- expand.grid(px, py) > x_test <- as.matrix(x_test) > pred_class <- model %>% predict(x_test, batch_size=100) > pred_class <- round(pred_class, 0) > plot(d[,-3], col=c(rep(1,50000), rep(2,50000)), pch=19, cex=0.2, xlim=c(-4,4), ylim=c(-4,4)) > par(new=T) > contour(px, py, array(pred_class, dim=c(length(px), length(py))), xlim=c(-4,4), ylim=c(-4,4), levels=0.5, col='purple', lwd=5)

当たり前ですが、綺麗なXOR決定境界が引けました。

オンラインニュース人気度データセットで4層のDNNを試してみる

これは以前{mxnet}で試した記事と同じものです。

> d <- read.csv('OnlineNewsPopularity.csv') > d <- d[,-c(1,2)] > idx <- sample(nrow(d),5000,replace=F) > d_train <- d[-idx,] > d_test <- d[idx,] > train <- data.matrix(d_train) > test <- data.matrix(d_test) > train.x <- train[,-59] > train.y <- train[,59] > test.x <- test[,-59] > test.y <- test[,59] > # 正規化 > train_means <- apply(train.x, 2, mean) > train_stds <- apply(train.x, 2, sd) > test_means <- apply(test.x, 2, mean) > test_stds <- apply(test.x, 2, sd) > train.x <- t((t(train.x)-train_means)/train_stds) > test.x <- t((t(test.x)-test_means)/test_stds) > > # 目的変数を対数変換 > train.y <- log(train.y) > test.y <- log(test.y) > > library(keras) > model <- keras_model_sequential() > model %>% layer_dense(units = 200, input_shape=58) %>% + layer_activation(activation = 'sigmoid') %>% + layer_dropout(rate = 0.2) %>% + layer_dense(units = 50) %>% + layer_activation(activation = 'sigmoid') %>% + layer_dropout(rate = 0.2) %>% + layer_dense(units = 44) %>% + layer_activation(activation = 'sigmoid') %>% + layer_dropout(rate = 0.2) %>% + layer_dense(units = 1) %>% + layer_activation(activation = 'linear') %>% + compile( + loss = 'mse', + optimizer = optimizer_rmsprop(lr = 0.001) + ) > model %>% fit(train.x, train.y, epochs = 8, batch_size = 100) Epoch 1/8 34644/34644 [==============================] - 1s - loss: 4.3911 Epoch 2/8 34644/34644 [==============================] - 1s - loss: 1.2735 Epoch 3/8 34644/34644 [==============================] - 1s - loss: 1.1401 Epoch 4/8 34644/34644 [==============================] - 1s - loss: 1.0667 Epoch 5/8 34644/34644 [==============================] - 1s - loss: 1.0511 Epoch 6/8 34644/34644 [==============================] - 1s - loss: 1.0271 Epoch 7/8 34644/34644 [==============================] - 1s - loss: 0.9964 Epoch 8/8 34644/34644 [==============================] - 1s - loss: 1.0003 > > pred_reg <- model %>% predict(test.x, batch_size=100) > library(Metrics) > rmse(pred_reg, test.y) [1] 0.8589696

意外とそこまで悪くない結果になりました。少なくとも{mxnet}でベイズ最適化まで突っ込んで試行錯誤した割にあまり良いスコアにならなかったことを考えると上出来かなと。

ド定番MNISTをDNNでやってみる

もう見たまんまです。これはDNNというよりMLP(Multi-Layer Perceptron: 多層パーセプトロン)と言った方が正しいのだろうとは思いますが。

> train <- read.csv('short_mnist_train.csv', header=T, sep=',') > test <- read.csv('short_mnist_test.csv', header=T, sep=',') > train.x <- as.matrix(train[,-1]/255) > test.x <- as.matrix(test[,-1]/255) > train.y <- train[,1] %>% to_categorical(num_classes = 10) > test.y <- test[,1] %>% to_categorical(num_classes = 10) > library(keras) > model <- keras_model_sequential() > model %>% + layer_dense(units = 128, input_shape=c(784)) %>% + layer_activation(activation = 'relu') %>% + # layer_dropout(rate = 0.5) %>% + layer_dense(units = 64) %>% + layer_activation(activation = 'relu') %>% + # layer_dropout(rate = 0.5) %>% + layer_dense(units = 10) %>% + layer_activation(activation = 'softmax') %>% + compile( + loss = 'categorical_crossentropy', + optimizer = optimizer_sgd(lr = 0.07, decay = 1e-6, momentum = 0.9, nesterov = TRUE) + ) > t <- proc.time() > model %>% fit(train.x, train.y, epochs = 10, batch_size = 100) Epoch 1/10 5000/5000 [==============================] - 0s - loss: 0.7911 Epoch 2/10 5000/5000 [==============================] - 0s - loss: 0.2737 Epoch 3/10 5000/5000 [==============================] - 0s - loss: 0.1813 Epoch 4/10 5000/5000 [==============================] - 0s - loss: 0.1218 Epoch 5/10 5000/5000 [==============================] - 0s - loss: 0.0807 Epoch 6/10 5000/5000 [==============================] - 0s - loss: 0.0583 Epoch 7/10 5000/5000 [==============================] - 0s - loss: 0.0358 Epoch 8/10 5000/5000 [==============================] - 0s - loss: 0.0238 Epoch 9/10 5000/5000 [==============================] - 0s - loss: 0.0162 Epoch 10/10 5000/5000 [==============================] - 0s - loss: 0.0104 > proc.time() - t ユーザ システム 経過 5.216 0.538 3.332 > pred_class <- model %>% predict(test.x, batch_size=100) > pred_label <- t(max.col(pred_class)) > table(test[,1], pred_label) pred_label 1 2 3 4 5 6 7 8 9 10 0 94 0 0 0 0 1 2 1 1 1 1 0 97 2 0 0 0 0 0 1 0 2 0 0 96 1 0 0 1 2 0 0 3 0 1 2 91 0 3 0 0 2 1 4 0 0 0 0 95 0 2 1 0 2 5 0 1 0 1 0 95 2 0 1 0 6 0 0 0 0 0 2 97 0 1 0 7 0 0 0 0 1 0 0 96 0 3 8 0 0 1 1 1 0 0 0 97 0 9 0 0 0 0 2 1 1 1 0 95 > sum(diag(table(test[,1], pred_label)))/nrow(test) [1] 0.953 # seedを決めていないので多少変動するはず

一応、それなりには走ります。またそれなりの精度にはなります。ちなみに、{mxnet}で全く同じネットワークを試してみるとこうなります。

> train<-data.matrix(train) > test<-data.matrix(test) > train.x<-train[,-1] > train.y<-train[,1] > train.x<-t(train.x/255) > test_org<-test > test<-test[,-1] > test<-t(test/255) > > library(mxnet) > data <- mx.symbol.Variable("data") > fc1 <- mx.symbol.FullyConnected(data, name="fc1", num_hidden=128) > act1 <- mx.symbol.Activation(fc1, name="relu1", act_type="relu") > fc2 <- mx.symbol.FullyConnected(act1, name="fc2", num_hidden=64) > act2 <- mx.symbol.Activation(fc2, name="relu2", act_type="relu") > fc3 <- mx.symbol.FullyConnected(act2, name="fc3", num_hidden=10) > softmax <- mx.symbol.SoftmaxOutput(fc3, name="sm") > devices <- mx.cpu() > mx.set.seed(0) > t <- proc.time() > model <- mx.model.FeedForward.create(softmax, X=train.x, y=train.y, + ctx=devices, num.round=10, array.batch.size=100, + learning.rate=0.07, momentum=0.9, eval.metric=mx.metric.accuracy, + initializer=mx.init.uniform(0.07), + epoch.end.callback=mx.callback.log.train.metric(100)) Start training with 1 devices [1] Train-accuracy=0.470204081632653 [2] Train-accuracy=0.8326 [3] Train-accuracy=0.9052 [4] Train-accuracy=0.9278 [5] Train-accuracy=0.9466 [6] Train-accuracy=0.9568 [7] Train-accuracy=0.9646 [8] Train-accuracy=0.9756 [9] Train-accuracy=0.9888 [10] Train-accuracy=0.9926 > > proc.time() - t ユーザ システム 経過 2.540 0.601 1.718 > preds <- predict(model, test, ctx=devices) > pred.label <- max.col(t(preds)) - 1 > table(test_org[,1],pred.label) pred.label 0 1 2 3 4 5 6 7 8 9 0 94 0 1 0 0 0 3 0 1 1 1 0 100 0 0 0 0 0 0 0 0 2 0 0 97 1 1 0 0 1 0 0 3 0 0 2 91 0 3 0 1 2 1 4 0 0 0 0 95 0 2 1 0 2 5 0 1 0 1 0 96 0 0 2 0 6 1 0 0 0 1 3 94 0 1 0 7 0 0 0 0 0 0 0 100 0 0 8 0 0 1 1 1 1 0 0 96 0 9 0 0 0 0 6 1 0 4 0 89 > sum(diag(table(test_org[,1],pred.label)))/1000 [1] 0.952

あれれ、{mxnet}の方が精度は同じくらいなのに2秒以上速いですね(笑)。これはPythonバージョンのKerasだとちょっと考えにくい感じかなぁと。

MNISTのCNNをやろうとしたらコケた話

最後に、MNISTのCNNをやってみようと思います。なのですが。。。

library(keras) train <- read.csv('short_mnist_train.csv', header=T, sep=',') test <- read.csv('short_mnist_test.csv', header=T, sep=',') x_train <- array(train[,-1]/255, dim=c(5000, 28, 28, 1)) y_train <- train[,1] %>% to_categorical(num_classes = 10) x_test <- array(test[,-1]/255, dim=c(1000, 28, 28, 1)) y_test <- test[,1] %>% to_categorical(num_classes = 10) # create model model <- keras_model_sequential() model %>% layer_conv_2d(filters = 32, kernel_size = c(3,3), activation = 'relu', input_shape = c(28,28, 1)) %>% layer_conv_2d(filters = 64, kernel_size = c(3,3), activation = 'relu') %>% layer_max_pooling_2d(pool_size = c(2,2)) %>% layer_dropout(rate = 0.25) %>% layer_conv_2d(filters = 64, kernel_size = c(3,3), activation = 'relu') %>% layer_conv_2d(filters = 64, kernel_size = c(3,3), activation = 'relu') %>% layer_max_pooling_2d(pool_size = c(2,2)) %>% layer_dropout(rate = 0.25) %>% layer_flatten() %>% layer_dense(units = 128, activation = 'relu') %>% layer_dropout(rate = 0.25) %>% layer_dense(units = 10, activation = 'softmax') %>% compile( loss = 'categorical_crossentropy', optimizer = optimizer_sgd(lr = 0.01, decay = 1e-6, momentum = 0.9, nesterov = TRUE) ) # train model %>% fit(x_train, y_train, batch_size = 32, epochs = 10) # evaluate score <- model %>% evaluate(x_test, y_test, batch_size = 32)

これで走るはずなんですが。。。出来上がったx_train, x_testのarrayの容量を見たら147GB, 6GBもあってローカルでは走らないと判明orz せめて{Matrix}のsparse.model.matrix使わせて欲しいなぁと。。。もしくはFAQで推奨されているtrain_on_batchを使うとか、time_distributedで軽量化するとかする必要がありそうです。ちょっとここは詳しい人に解決策教えてもらわないと。。。

追記1:ExampleのMNIST x CNNの例は走りました

ExampleにMNIST x CNNの例が載っているんですが、こちらは確かに走りました。

library(keras) batch_size <- 128 num_classes <- 10 epochs <- 12 # input image dimensions img_rows <- 28 img_cols <- 28 # the data, shuffled and split between train and test sets mnist <- dataset_mnist() x_train <- mnist$train$x y_train <- mnist$train$y x_test <- mnist$test$x y_test <- mnist$test$y x_train <- array(as.numeric(x_train), dim = c(dim(x_train)[[1]], img_rows, img_cols, 1)) x_test <- array(as.numeric(x_test), dim = c(dim(x_test)[[1]], img_rows, img_cols, 1)) input_shape <- c(img_rows, img_cols, 1) x_train <- x_train / 255 x_test <- x_test / 255 cat('x_train_shape:', dim(x_train), '\n') cat(dim(x_train)[[1]], 'train samples\n') cat(dim(x_test)[[1]], 'test samples\n') # convert class vectors to binary class matrices y_train <- to_categorical(y_train, num_classes) y_test <- to_categorical(y_test, num_classes) # define model model <- keras_model_sequential() model %>% layer_conv_2d(filters = 32, kernel_size = c(3,3), activation = 'relu', input_shape = input_shape) %>% layer_conv_2d(filters = 64, kernel_size = c(3,3), activation = 'relu') %>% layer_max_pooling_2d(pool_size = c(2, 2)) %>% layer_dropout(rate = 0.25) %>% layer_flatten() %>% layer_dense(units = 128, activation = 'relu') %>% layer_dropout(rate = 0.5) %>% layer_dense(units = num_classes, activation = 'softmax') # compile model model %>% compile( loss = loss_categorical_crossentropy, optimizer = optimizer_adadelta(), metrics = c('accuracy') ) # train and evaluate model %>% fit( x_train, y_train, batch_size = batch_size, epochs = epochs, verbose = 1, validation_data = list(x_test, y_test) ) scores <- model %>% evaluate( x_test, y_test, verbose = 0 ) cat('Test loss:', scores[[1]], '\n') cat('Test accuracy:', scores[[2]], '\n')

Train on 60000 samples, validate on 10000 samples Epoch 1/12 2017-06-03 14:11:45.945824: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.1 instructions, but these are available on your machine and could speed up CPU computations. 2017-06-03 14:11:45.945842: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations. 2017-06-03 14:11:45.945848: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations. 2017-06-03 14:11:45.945853: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations. 2017-06-03 14:11:45.945859: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations. 60000/60000 [==============================] - 197s - loss: 0.3336 - acc: 0.8989 - val_loss: 0.0789 - val_acc: 0.9761 Epoch 2/12 60000/60000 [==============================] - 197s - loss: 0.1136 - acc: 0.9667 - val_loss: 0.0554 - val_acc: 0.9818 Epoch 3/12 60000/60000 [==============================] - 187s - loss: 0.0853 - acc: 0.9749 - val_loss: 0.0439 - val_acc: 0.9855 Epoch 4/12 60000/60000 [==============================] - 192s - loss: 0.0713 - acc: 0.9793 - val_loss: 0.0389 - val_acc: 0.9873 Epoch 5/12 60000/60000 [==============================] - 179s - loss: 0.0638 - acc: 0.9817 - val_loss: 0.0347 - val_acc: 0.9884 Epoch 6/12 60000/60000 [==============================] - 181s - loss: 0.0549 - acc: 0.9838 - val_loss: 0.0326 - val_acc: 0.9894 Epoch 7/12 60000/60000 [==============================] - 184s - loss: 0.0511 - acc: 0.9849 - val_loss: 0.0309 - val_acc: 0.9889 Epoch 8/12 60000/60000 [==============================] - 182s - loss: 0.0457 - acc: 0.9864 - val_loss: 0.0301 - val_acc: 0.9891 Epoch 9/12 60000/60000 [==============================] - 181s - loss: 0.0437 - acc: 0.9866 - val_loss: 0.0280 - val_acc: 0.9915 Epoch 10/12 60000/60000 [==============================] - 175s - loss: 0.0414 - acc: 0.9878 - val_loss: 0.0283 - val_acc: 0.9908 Epoch 11/12 60000/60000 [==============================] - 182s - loss: 0.0372 - acc: 0.9890 - val_loss: 0.0274 - val_acc: 0.9911 Epoch 12/12 60000/60000 [==============================] - 193s - loss: 0.0377 - acc: 0.9889 - val_loss: 0.0270 - val_acc: 0.9913 > scores <- model %>% evaluate( + x_test, y_test, verbose = 0 + ) > > cat('Test loss:', scores[[1]], '\n') Test loss: 0.0269964 > cat('Test accuracy:', scores[[2]], '\n') Test accuracy: 0.9913

ちゃんとExamplesの説明通り、99%以上の精度が出ています。

追記2:元のMNISTのCNNも走りました

ようやく分かりました。

TJOさんの例でも,x_train <- array(as.matrix(train[,-1]/255), dim=c(5000, 28, 28, 1))などとすると正しい配列ができて動くようです.私も普段あまり配列は使わないので,こういうのは地味にハマりポイントですね....

— nakamichi (@__nakamichi__) 2017年6月3日

ということで、arrayに渡す時にas.matrixするとtrainで30MBぐらい&testで6MBぐらいということで、ちゃんとまともな容量のarrayになって回るようです。ごめんなさいorz

library(keras) train <- read.csv('short_mnist_train.csv', header=T, sep=',') test <- read.csv('short_mnist_test.csv', header=T, sep=',') x_train <- array(<span style="color: #ff0000">as.matrix</span>(train[,-1]/255), dim=c(5000, 28, 28, 1)) y_train <- train[,1] %>% to_categorical(num_classes = 10) x_test <- array(<span style="color: #ff0000">as.matrix</span>(test[,-1]/255), dim=c(1000, 28, 28, 1)) y_test <- test[,1] %>% to_categorical(num_classes = 10) # create model model <- keras_model_sequential() model %>% layer_conv_2d(filters = 32, kernel_size = c(3,3), activation = 'relu', input_shape = c(28,28, 1)) %>% layer_conv_2d(filters = 64, kernel_size = c(3,3), activation = 'relu') %>% layer_max_pooling_2d(pool_size = c(2,2)) %>% layer_dropout(rate = 0.25) %>% layer_conv_2d(filters = 64, kernel_size = c(3,3), activation = 'relu') %>% layer_conv_2d(filters = 64, kernel_size = c(3,3), activation = 'relu') %>% layer_max_pooling_2d(pool_size = c(2,2)) %>% layer_dropout(rate = 0.25) %>% layer_flatten() %>% layer_dense(units = 128, activation = 'relu') %>% layer_dropout(rate = 0.25) %>% layer_dense(units = 10, activation = 'softmax') %>% compile( loss = 'categorical_crossentropy', optimizer = optimizer_sgd(lr = 0.01, decay = 1e-6, momentum = 0.9, nesterov = TRUE) ) # train model %>% fit(x_train, y_train, batch_size = 32, epochs = 10) # evaluate score <- model %>% evaluate(x_test, y_test, batch_size = 32)

Epoch 1/10 5000/5000 [==============================] - 20s - loss: 1.1239 Epoch 2/10 5000/5000 [==============================] - 25s - loss: 0.2766 Epoch 3/10 5000/5000 [==============================] - 23s - loss: 0.1871 Epoch 4/10 5000/5000 [==============================] - 21s - loss: 0.1420 Epoch 5/10 5000/5000 [==============================] - 23s - loss: 0.1142 Epoch 6/10 5000/5000 [==============================] - 23s - loss: 0.1089 Epoch 7/10 5000/5000 [==============================] - 22s - loss: 0.0929 Epoch 8/10 5000/5000 [==============================] - 22s - loss: 0.0819 Epoch 9/10 5000/5000 [==============================] - 22s - loss: 0.0674 Epoch 10/10 5000/5000 [==============================] - 21s - loss: 0.0616 > > # evaluate > score <- model %>% evaluate(x_test, y_test, batch_size = 32) 992/1000 [============================>.] - ETA: 0s > score [1] 0.05340803 > pred_class <- model %>% predict(x_test, batch_size=100) > pred_label <- t(max.col(pred_class)) > table(test[,1], pred_label) pred_label 1 2 3 4 5 6 7 8 9 10 0 98 0 0 0 0 0 2 0 0 0 1 0 100 0 0 0 0 0 0 0 0 2 0 0 99 0 0 0 0 1 0 0 3 0 0 0 100 0 0 0 0 0 0 4 0 2 0 0 97 0 1 0 0 0 5 0 0 0 0 0 100 0 0 0 0 6 1 0 0 0 0 2 97 0 0 0 7 0 0 0 1 0 0 0 99 0 0 8 0 1 1 1 0 1 0 0 96 0 9 0 0 0 0 1 1 0 1 0 97 > sum(diag(table(test[,1], pred_label)))/nrow(test) [1] 0.983

ちゃんと回りました。スコアも学習5000行、テスト1000行にしては悪くない結果になっていると思います。なお、これとほぼ同じ?ネットワークを{mxnet}で作って回してみたら。。。何故か同じ畳み込み層の連続&学習係数&ミニバッチ数&エポック数では全然学習しないので、ちょっといじってありますがこんな感じになります。

> train <- read.csv('https://github.com/ozt-ca/tjo.hatenablog.samples/raw/master/r_samples/public_lib/jp/mnist_reproduced/short_prac_train.csv') > test <- read.csv('https://github.com/ozt-ca/tjo.hatenablog.samples/raw/master/r_samples/public_lib/jp/mnist_reproduced/short_prac_test.csv') > train<-data.matrix(train) > test<-data.matrix(test) > train.x<-train[,-1] > train.y<-train[,1] > train.x<-t(train.x/255) > test_org<-test > test<-test[,-1] > test<-t(test/255) > > library(mxnet) > > data <- mx.symbol.Variable("data") > devices<-mx.cpu() > # first conv > conv1 <- mx.symbol.Convolution(data=data, kernel=c(3,3), num_filter=32) > tanh1 <- mx.symbol.Activation(data=conv1, act_type="relu") > pool1 <- mx.symbol.Pooling(data=tanh1, pool_type="max", + kernel=c(2,2), stride=c(2,2)) > drop1 <- mx.symbol.Dropout(data=pool1,p=0.5) > # second conv > conv2 <- mx.symbol.Convolution(data=drop1, kernel=c(3,3), num_filter=64) > tanh2 <- mx.symbol.Activation(data=conv2, act_type="relu") > pool2 <- mx.symbol.Pooling(data=tanh2, pool_type="max", + kernel=c(2,2), stride=c(2,2)) > drop2 <- mx.symbol.Dropout(data=pool2,p=0.5) > # first fullc > flatten <- mx.symbol.Flatten(data=drop2) > fc1 <- mx.symbol.FullyConnected(data=flatten, num_hidden=128) > tanh4 <- mx.symbol.Activation(data=fc1, act_type="relu") > drop4 <- mx.symbol.Dropout(data=tanh4,p=0.5) > # second fullc > fc2 <- mx.symbol.FullyConnected(data=drop4, num_hidden=10) > # loss > lenet <- mx.symbol.SoftmaxOutput(data=fc2) > train.array <- train.x > dim(train.array) <- c(28, 28, 1, ncol(train.x)) > test.array <- test > dim(test.array) <- c(28, 28, 1, ncol(test)) > mx.set.seed(71) > devices <- mx.cpu() > tic <- proc.time() > model <- mx.model.FeedForward.create(lenet, X=train.array, y=train.y, + ctx=devices, num.round=30, array.batch.size=100, + learning.rate=0.05, momentum=0.9, wd=0.00001, + eval.metric=mx.metric.accuracy, + epoch.end.callback=mx.callback.log.train.metric(100)) Start training with 1 devices [1] Train-accuracy=0.0965306122448979 [2] Train-accuracy=0.0892 [3] Train-accuracy=0.0886 [4] Train-accuracy=0.0888 [5] Train-accuracy=0.0878 [6] Train-accuracy=0.0876 [7] Train-accuracy=0.0882 [8] Train-accuracy=0.089 [9] Train-accuracy=0.0924 [10] Train-accuracy=0.1272 [11] Train-accuracy=0.4322 [12] Train-accuracy=0.676 [13] Train-accuracy=0.7794 [14] Train-accuracy=0.8334 [15] Train-accuracy=0.8672 [16] Train-accuracy=0.8854 [17] Train-accuracy=0.9046 [18] Train-accuracy=0.912 [19] Train-accuracy=0.9182 [20] Train-accuracy=0.9234 [21] Train-accuracy=0.925 [22] Train-accuracy=0.9336 [23] Train-accuracy=0.9342 [24] Train-accuracy=0.933 [25] Train-accuracy=0.9442 [26] Train-accuracy=0.9402 [27] Train-accuracy=0.9384 [28] Train-accuracy=0.9502 [29] Train-accuracy=0.9466 [30] Train-accuracy=0.9468 > print(proc.time() - tic) ユーザ システム 経過 528.029 3.727 475.477 > preds <- predict(model, test.array, ctx=devices) > pred.label <- max.col(t(preds)) - 1 > table(test_org[,1],pred.label) pred.label 0 1 2 3 4 5 6 7 8 9 0 98 0 0 0 0 0 2 0 0 0 1 0 100 0 0 0 0 0 0 0 0 2 0 0 97 2 0 0 0 1 0 0 3 0 0 0 99 0 0 0 0 1 0 4 0 2 0 0 96 0 0 1 0 1 5 0 1 0 0 0 99 0 0 0 0 6 0 0 0 0 0 1 98 0 1 0 7 0 0 0 0 0 0 0 100 0 0 8 0 2 1 0 1 0 0 0 96 0 9 0 0 0 0 1 0 0 2 0 97 > sum(diag(table(test_org[,1],pred.label)))/1000 [1] 0.98

{keras}が222秒でACC 0.983だったのに対して、{mxnet}は528秒でACC 0.98。同じSGDで回してるはずなんですが({mxnet}だとデフォルトはSGD)、ちょっと結構な差がついた気がします。

感想

(追記前の感想)

同じことをやっても{mxnet}の方が{keras}より速いし、何と言っても{keras}でCNN回そうとするとshort version MNISTでもOOMでクラッシュするし。。。{mxnet}ならバカでっかいarrayにしなくても自前で2次元に変換して畳み込みやってくれるし。。。ということで、RでKerasを使うのはあまり相性が良くないんじゃないかと思った次第です。

ということで実際に回り切ったのを確認した上で改めて感想を書くと、全く同じネットワークを組んで比較した感じだと(実はPython側でKerasを触っていた時も思っていましたが){keras}の方が学習効率も良く高精度のモデルが組み上がる印象があります。ただ、RっぽさのあるところとRっぽくないところが混在している感じで、若干やりにくいなぁ感が。。。

(追記前の感想)

やっぱりKerasはPythonでTensorFlowバックエンドで動かして何ぼだと思いますので、KerasでDeep Learningやりたい人はPythonでやりましょう。お粗末様でしたm(_ _)m

この感想は正直言って変わらないですorz ぶっちゃけ単なるラッパーとしてではなく、R向けにソースから書き直してくれたらいいなぁと思ったんですが、それはTensorFlowのR版を作るというのと同じことなので過剰な要望かなぁと。。。ということで、やはり皆さんPythonもしっかり勉強しましょう(汗)。お疲れ様でした。